Hi @andrewback @ricardas,

thanks for your answers. I did some experiments with the hardware and a modified version of the code from my previous post.

Input:

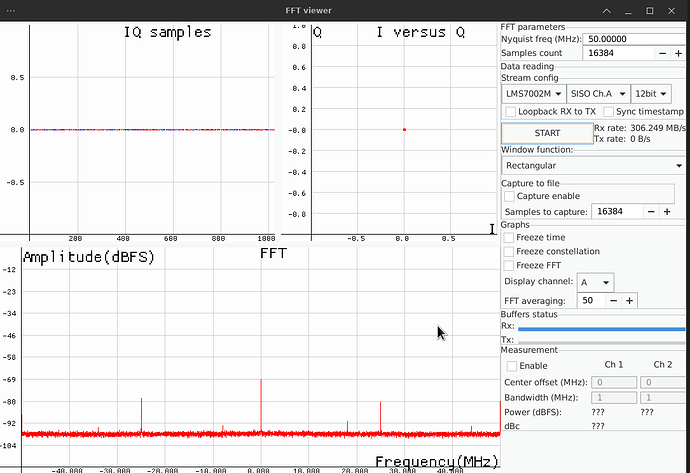

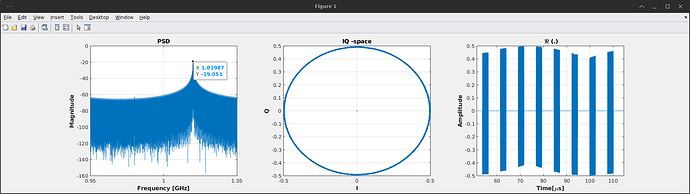

I feed the LimeSDR XTRX from a VSG60A vector signal generator: a single sinusoid at 1025 MHz with Ps = -40 dBm. The LimeSDR was tuned to fc = 1 GHz, and the sample rate was set to 100 MSPS.
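With the LO at 1 GHz and the tone at 1025 MHz, the tone should ideally appear at +25 MHz in the complex baseband. That is exactly a quarter of the 100 MSPS sample rate, so an undisturbed capture should repeat roughly every 4 samples (plus noise). The small C++ sketch below computes the offset and an ideal reference tone that could be overlaid on the captured data. The helper names `basebandOffsetHz` and `referenceTone` are hypothetical and are not part of LimeSuiteNG.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

// Frequency at which a real RF tone appears after complex down-conversion:
// positive if the tone is above the LO, negative if it is below.
double basebandOffsetHz(double fToneHz, double fLoHz)
{
    return fToneHz - fLoHz;
}

// Ideal unit-amplitude complex tone exp(j*2*pi*fOffset/fs*n), n = 0..count-1,
// to compare captured I/Q samples against.
std::vector<std::complex<double>> referenceTone(double fOffsetHz, double fsHz, std::size_t count)
{
    assert(fsHz > 0.0);
    // The offset is only unambiguous inside the complex Nyquist band (-fs/2, fs/2)
    assert(std::abs(fOffsetHz) < fsHz / 2.0);
    const double pi = std::acos(-1.0);
    std::vector<std::complex<double>> tone(count);
    for (std::size_t n = 0; n < count; ++n)
        tone[n] = std::polar(1.0, 2.0 * pi * fOffsetHz / fsHz * static_cast<double>(n));
    return tone;
}
```

For this setup, `basebandOffsetHz(1025.0e6, 1000.0e6)` gives 25 MHz, and `referenceTone(25.0e6, 100.0e6, 8)` cycles through 1, j, -1, -j twice.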

Sample collection:

See the attached code. The program collects 2^16 samples (or any other number) and saves the whole buffer to a file in one go. I guess this is the bare minimum needed to get the samples stored in a file.
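When saving each block, it is safer to write only the number of samples that the receive call actually returned rather than the full buffer capacity. Otherwise a short read leaves stale data from an earlier block in the file. Below is a minimal sketch of such a write helper. It uses `std::complex<float>` as a stand-in for `lime::complex32f_t`, and it assumes (rather than knows) that the latter is likewise laid out as two consecutive floats. `writeIqSamples` is a hypothetical name.

```cpp
#include <cassert>
#include <complex>
#include <cstddef>
#include <cstdio>

// Writes the first `count` samples to `f` as interleaved I/Q float32 values
// and returns true only if all 2 * count floats were written.
// Pass the value returned by the receive call as `count`, not the buffer size.
// std::complex<float> is guaranteed to be laid out as {real, imag}; treating
// lime::complex32f_t the same way is an assumption of this sketch.
bool writeIqSamples(std::FILE* f, const std::complex<float>* samples, std::size_t count)
{
    assert(samples != nullptr || count == 0);
    if (f == nullptr)
        return false;
    if (count == 0)
        return true;
    const std::size_t floatsToWrite = 2 * count;
    const std::size_t written =
        std::fwrite(reinterpret_cast<const float*>(samples), sizeof(float), floatsToWrite, f);
    return written == floatsToWrite;
}
```

Checking the return value also catches a full `/dev/shm`, which otherwise goes unnoticed when `fwrite` is called without inspecting its result.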

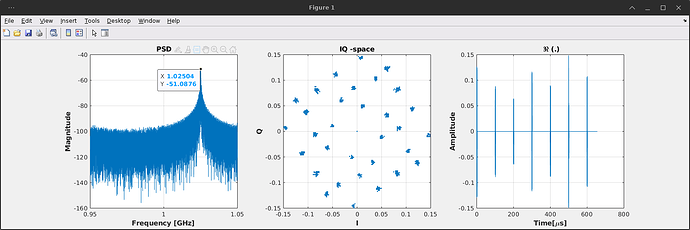

The data was imported into MATLAB; these are the results:
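For inspecting the file without MATLAB: the program at the end of this post writes one `unsigned int` header (samples per block) followed by interleaved float32 I/Q pairs. A minimal C++ reader sketch follows. It assumes the file is read on the same machine that wrote it (same endianness and `sizeof(unsigned int)`), and `readCapture` is a hypothetical name.

```cpp
#include <cassert>
#include <complex>
#include <cstddef>
#include <cstdio>
#include <vector>

// Reads a capture file: one unsigned int header (samples per block), then
// interleaved float32 I/Q pairs. Returns false if the file cannot be opened,
// the header is missing, a trailing partial I/Q pair is found, or a read fails.
bool readCapture(const char* path, unsigned int& samplesPerBlock,
                 std::vector<std::complex<float>>& samples)
{
    assert(path != nullptr);
    std::FILE* f = std::fopen(path, "rb");
    if (f == nullptr)
        return false;

    bool ok = std::fread(&samplesPerBlock, sizeof(samplesPerBlock), 1, f) == 1;

    samples.clear();
    float iq[2];
    std::size_t got = 0;
    while (ok && (got = std::fread(iq, sizeof(float), 2, f)) == 2)
        samples.emplace_back(iq[0], iq[1]);

    // got == 0 means a clean end of file; got == 1 means a truncated pair
    ok = ok && got == 0 && !std::ferror(f);
    std::fclose(f);
    return ok;
}
```

In MATLAB the equivalent is to read one `uint32` with `fread` and then the rest with `fread(fid, [2 Inf], 'float32')`, where row 1 holds I and row 2 holds Q.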

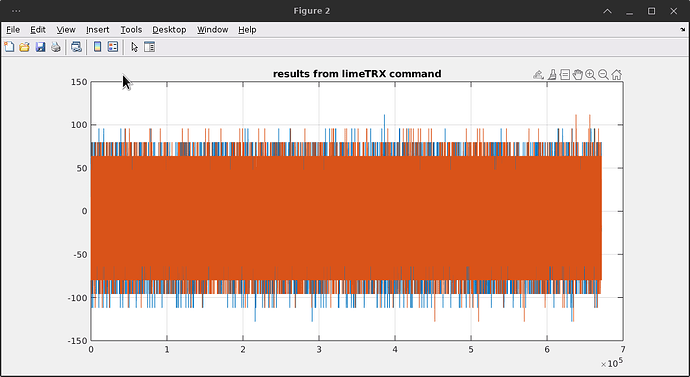

The real part (and also the imaginary part) of the data vector is zero most of the time (this is within a single 65k-sample buffer received from the device). Zooming into the first part of the samples shows:
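To put numbers on the zero stretches and the bursts, instead of judging from the plot, one could list the runs of samples that are not exactly 0+0j. The sketch below does this. The `Burst` struct and `findNonZeroBursts` are hypothetical names. The zero test is exact on purpose: converted ADC samples with a tone and noise present would rarely be exactly zero in both I and Q for long stretches, though that is a general expectation, not something measured here.

```cpp
#include <cassert>
#include <complex>
#include <cstddef>
#include <vector>

// A run of consecutive samples whose I and Q are not both exactly zero.
struct Burst
{
    std::size_t start;   // index of the first non-zero sample
    std::size_t length;  // number of consecutive non-zero samples
};

// Splits a capture into runs of non-zero samples, skipping exact zeros.
std::vector<Burst> findNonZeroBursts(const std::vector<std::complex<float>>& x)
{
    const std::complex<float> zero(0.0f, 0.0f);
    std::vector<Burst> bursts;
    std::size_t i = 0;
    while (i < x.size())
    {
        while (i < x.size() && x[i] == zero)  // skip a zero run
            ++i;
        if (i == x.size())
            break;
        const std::size_t start = i;
        while (i < x.size() && x[i] != zero)  // consume a non-zero run
            ++i;
        assert(i > start);  // every burst holds at least one sample
        bursts.push_back({start, i - start});
    }
    return bursts;
}
```

Printing `start` and `length` for each burst shows directly whether the non-zero data arrives in regular chunks (for example, multiples of a packet size) or at random positions.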

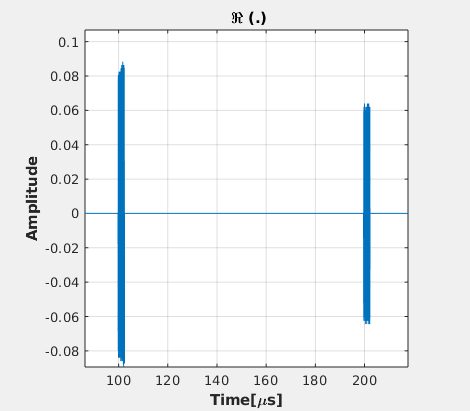

and two “bursts”:

I also ran experiments with 1024^2 samples, and the signal looked very similar to the 65k data vector.

I did not dive into the limeGUI source code (I am not a C++ specialist), but limeGUI itself seems to work well.

Maybe there is a “magic” configuration item that I did not set correctly, and lack of a proper setup is the main source of this behaviour.

Could anybody try running the code below on a LimeSDR XTRX and see what happens?

Cheers,

Darek

The code:

```cpp
#include "limesuiteng/limesuiteng.hpp"

#include <chrono>
#include <csignal>
#include <inttypes.h>
#include <iostream>
#include <memory>
#include <stdio.h>
#include <string>
#include <string_view>

#include "common.h"

using namespace lime;
using namespace std::literals::string_view_literals;

double frequencyLO = 1000.0e6;
float msr_duration_in_sec = 0.5;
double sampleRate = 100.0e6;
static uint8_t chipIndex = 0; // device might have several RF chips

// Only a sig_atomic_t flag may be written safely from a signal handler
static volatile std::sig_atomic_t stopProgram = 0;

void intHandler(int)
{
    stopProgram = 1; // no printing here: std::cout is not async-signal-safe
}

static LogLevel logVerbosity = LogLevel::Verbose;

static void LogCallback(LogLevel lvl, const std::string& msg)
{
    if (lvl > logVerbosity)
        return;
    std::cout << msg << std::endl;
}

int main(int argc, char** argv)
{
    lime::registerLogHandler(LogCallback);
    auto handles = DeviceRegistry::enumerate();
    if (handles.size() == 0)
    {
        std::cout << "No devices found\n"sv;
        return -1;
    }

    std::cout << "Devices found :"sv << std::endl;
    for (size_t i = 0; i < handles.size(); i++)
        std::cout << i << ": "sv << handles[i].Serialize() << std::endl;
    std::cout << std::endl;

    // Use first available device
    SDRDevice* device = DeviceRegistry::makeDevice(handles.at(0));
    if (!device)
    {
        std::cout << "Failed to connect to device"sv << std::endl;
        return -1;
    }
    device->SetMessageLogCallback(LogCallback);
    device->Init();

    // RF parameters
    SDRConfig config;
    config.channel[0].rx.enabled = true;
    config.channel[0].rx.centerFrequency = frequencyLO;
    config.channel[0].rx.sampleRate = sampleRate;
    config.channel[0].rx.oversample = 2;
    config.channel[0].rx.lpf = 0;
    config.channel[0].rx.path = 2; // rxPath
    config.channel[0].rx.calibrate = false;
    config.channel[0].rx.testSignal.enabled = false;

    // TX is inactive
    config.channel[0].tx.enabled = false;
    config.channel[0].tx.sampleRate = sampleRate;
    config.channel[0].tx.oversample = 2;
    config.channel[0].tx.path = 0;
    config.channel[0].tx.centerFrequency = frequencyLO - 1e6;
    config.channel[0].tx.testSignal.enabled = false;

    std::cout << "Configuring device ...\n"sv;
    auto t1 = std::chrono::high_resolution_clock::now();
    device->Configure(config, chipIndex);
    auto t2 = std::chrono::high_resolution_clock::now();
    std::cout << "SDR configured in "sv
              << std::chrono::duration_cast<std::chrono::milliseconds>(t2 - t1).count() << "ms\n"sv;

    // Open the output file before the stream starts, so a failure here
    // does not leave a running stream behind
    FILE* file_id = fopen("/dev/shm/lime_sdr_samples_fs1025MHz_fc1000MHz_100MSPS_pt_m40dBm.bin", "wb");
    if (file_id == NULL)
    {
        printf("Cannot create the samples file\n");
        DeviceRegistry::freeDevice(device);
        return -1;
    }

    // Samples data streaming configuration
    StreamConfig streamCfg;
    streamCfg.channels[TRXDir::Rx] = { 0 };
    streamCfg.format = DataFormat::F32;
    streamCfg.linkFormat = DataFormat::I16;

    std::unique_ptr<lime::RFStream> stream = device->StreamCreate(streamCfg, chipIndex);
    stream->Start();
    std::cout << "Stream started ...\n"sv;
    std::signal(SIGINT, intHandler);

    const unsigned int num_samples_to_read = 64 * 1024;
    const unsigned int num_blocks_to_read = 10;

    complex32f_t** rxSamples = new complex32f_t*[2]; // allocate two channels for simplicity
    for (int i = 0; i < 2; ++i)
        rxSamples[i] = new complex32f_t[num_samples_to_read];

    uint64_t totalSamplesReceived = 0;
    StreamMeta rxMeta{};
    StreamStats rxStreamStat;
    size_t written_to_file;
    size_t total_written_to_file = 0;

    printf("max num samples per block:%u\n", num_samples_to_read);
    printf("max num samples to collect:%u\n", num_blocks_to_read * num_samples_to_read);

    int print_debug = 0;
    double freq = device->GetFrequency(chipIndex, TRXDir::Rx, 0);
    printf("Freq:%f [MHz]\n", freq / 1.0e6);

    uint32_t blk_cnt = 0;

    // File header: the number of samples per block
    written_to_file = fwrite(&num_samples_to_read, sizeof(unsigned int), 1, file_id);

    // Stop after num_blocks_to_read blocks or on Ctrl+C
    while (blk_cnt < num_blocks_to_read && !stopProgram)
    {
        // read samples; the call may return fewer than requested
        uint32_t samplesRead = stream->StreamRx(rxSamples, num_samples_to_read, &rxMeta);
        if (samplesRead == 0)
        {
            printf("--ZERO SAMPLES --\n");
            continue;
        }

        // Print stream statistics every 5th block (blk_cnt itself is left untouched)
        if (print_debug == 1 && (blk_cnt % 5) == 0)
        {
            stream->StreamStatus(&rxStreamStat, nullptr);
            // casts keep the format specifiers valid whatever the field widths are
            printf(" pkg overrun: %llu, lost: %llu, data rate:%f, late:%llu, packets:%llu\n",
                static_cast<unsigned long long>(rxStreamStat.overrun),
                static_cast<unsigned long long>(rxStreamStat.loss),
                rxStreamStat.dataRate_Bps,
                static_cast<unsigned long long>(rxStreamStat.late),
                static_cast<unsigned long long>(rxStreamStat.packets));
        }

        // Write only the samples actually returned, as interleaved I/Q floats
        written_to_file = fwrite(rxSamples[0], sizeof(float), 2 * static_cast<size_t>(samplesRead), file_id);
        if (written_to_file != 2 * static_cast<size_t>(samplesRead))
            printf("Short write to the samples file\n");
        total_written_to_file += sizeof(float) * written_to_file;
        totalSamplesReceived += samplesRead;
        blk_cnt++;
    }

    printf("Bytes written to file: %zu\n", total_written_to_file);
    printf("Total samples received: %" PRIu64 "\n", totalSamplesReceived);

    // clean up
    stream->Stop();
    stream.reset();
    fclose(file_id);
    DeviceRegistry::freeDevice(device);
    for (int i = 0; i < 2; ++i)
        delete[] rxSamples[i];
    delete[] rxSamples;
    return 0;
}
```
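The posted code declares `msr_duration_in_sec` but never uses it, and the capture length is fixed by `num_blocks_to_read`. If the intent is to capture a given duration, the block count and the resulting file size could be derived from it. Below is a sketch with hypothetical helper names, assuming the file layout used above (one `unsigned int` header plus two floats per sample).

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Number of fixed-size blocks needed to cover at least `durationSec` seconds
// at `sampleRate`, rounded up to whole blocks.
std::uint64_t blocksForDuration(double durationSec, double sampleRate, std::uint64_t samplesPerBlock)
{
    assert(durationSec >= 0.0 && sampleRate > 0.0 && samplesPerBlock > 0);
    const auto samplesNeeded = static_cast<std::uint64_t>(std::ceil(durationSec * sampleRate));
    return (samplesNeeded + samplesPerBlock - 1) / samplesPerBlock;
}

// Size of the output file: one header word plus two floats (I and Q) per sample.
std::uint64_t captureFileBytes(std::uint64_t blocks, std::uint64_t samplesPerBlock)
{
    return sizeof(unsigned int) + blocks * samplesPerBlock * 2 * sizeof(float);
}
```

With 0.5 s at 100 MSPS and 64k-sample blocks, this gives 763 blocks, or roughly 400 MB in `/dev/shm`, which is RAM-backed on Linux. For the 10 blocks actually read, the data part is 5242880 bytes, which matches the "Bytes written to file" line in the output.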

And the output from the program:

```
Devices found :
0: LimeSDR XTRX, media=PCIe, addr=/dev/limepcie0, serial=000000000e5dd88c

Configuring device ...
Sampling rate set(100.000 MHz): CGEN:400.000 MHz, Decim: 2^8, Interp: 2^8
SDR configured in 190ms
/dev/limepcie0/trx0 Rx0 Setup: usePoll:1 rxSamplesInPkt:256 rxPacketsInBatch:39, DMA_ReadSize:40560, link:I16, batchSizeInTime:99.84us FS:100000000.000000
Stream started ...
max num samples per block:65536
max num samples to collect:655360
Freq:999.999996 [MHz]
Bytes written to file: 5242880
Total samples received: 655360
Rx0 stop: packetsIn: 2652
```
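The "Rx0 Setup" line can be cross-checked with simple arithmetic. 39 packets of 256 samples at 100 MSPS is one batch of 99.84 us, and with the I16 link format (2 bytes I + 2 bytes Q per sample) a 40560-byte DMA read leaves 16 bytes per packet beyond the sample payload. The sketch below assumes each DMA read holds exactly `rxPacketsInBatch` whole packets. Reading the remainder as a per-packet header is an interpretation of the numbers, not something stated in the log. `RxSetup` and the helper names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Figures taken from the "Rx0 Setup" log line.
struct RxSetup
{
    std::uint32_t samplesInPkt;    // rxSamplesInPkt
    std::uint32_t packetsInBatch;  // rxPacketsInBatch
    std::uint32_t dmaReadSize;     // DMA_ReadSize [bytes]
    double sampleRate;             // FS [S/s]
};

// Duration of one DMA batch in microseconds.
double batchTimeUs(const RxSetup& s)
{
    assert(s.sampleRate > 0.0);
    return 1e6 * s.samplesInPkt * s.packetsInBatch / s.sampleRate;
}

// Bytes per packet beyond the I16 payload (4 bytes per complex sample),
// assuming the DMA read consists of packetsInBatch equally sized packets.
std::uint32_t perPacketOverheadBytes(const RxSetup& s)
{
    assert(s.packetsInBatch > 0 && s.dmaReadSize % s.packetsInBatch == 0);
    return s.dmaReadSize / s.packetsInBatch - s.samplesInPkt * 4;
}
```

With `RxSetup{256, 39, 40560, 100e6}`, `batchTimeUs` returns 99.84, matching `batchSizeInTime`. If every packet carries 256 samples, `packetsIn: 2652` corresponds to 2652 × 256 = 678,912 samples, slightly more than the 655,360 the program read.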