Hello. I thought I’d give the limesuite xtrx drivers a try, but I’ve run into some issues. Hopefully you can let me know if what I’m trying is pointless or something I just missed.

What happens:

All SPI interactions take a long time; I presume they’re timing out and there’s no error handling yet. When stepping through any of the functions, it seems like nothing is being set or read correctly. Perhaps the SPI lines were routed differently on the Lime version than on the Fairwaves one?

What I’m running:

SDR: Fairwaves XTRX rev5

Host: Pi CM4 with Ubuntu 20.04.5

Gateware: LimeSDR-XTRX_GW on the branch LimeSDR-XTRX

Kernel driver: pcie_kernel_sw on the branch litepcie-update

Userland driver: LimeSuite_5G on the branch litepcie-update-XTRX.

Thanks for any help. Looking forward to when everything gets released!

The litepcie-update-XTRX branch is faulty; use the litepcie-update branch.

Thanks, that’s good to know.

So far the only combination of userland driver and gateware I’ve found that tries to do something is gateware from the master branch and driver from commit hash 4908c6e1 (right before the recent push). It doesn’t hang, but it also doesn’t stream samples. Anything more recent and it just hangs until every SPI transaction times out.

I skimmed through the hdl and microblaze changes, nothing jumped out at me that would cause this.

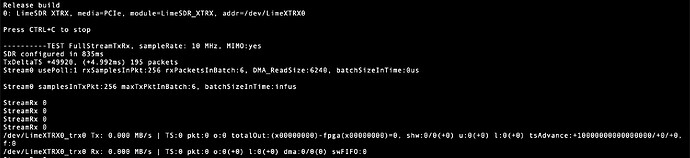

Console output with latest gateware/driver

Console output with the older ones mentioned above

Edit: I tried the latest driver with the gateware from before the pin change branch was merged in and got a little further, though it never progresses past this point.

I think I figured out both of my issues.

First: @ricardas In the constructor for LimeSDR_XTRX, the initializer for mFPGAcomms gets passed the trx pipe instead of the control pipe. So that’s why I couldn’t get past any FPGA SPI transactions.

Second: the rev5 and upcoming boards use different XO DACs. As soon as I get Vitis to cooperate, I’ll generate a new bitstream with the old DAC values back in.

@brainey6 Thanks for finding the issue, looks like I broke it during recent code refactor.

I switched from a pi to a different SBC and my sample streaming issue went away, so it works now! Mostly.

My issue now is that the carrier way overpowers my signal, but enabling calibration always fails with the “Loopback signal weak” error. I tried adding code to set the gain and made sure the board was cold, but the outcome is the same. If I skip CheckSaturationTxRx, it at least finishes, but the carrier is still there. If you have any insight, it’d be greatly appreciated.

Everything is pretty much working now except for one thing which breaks any realtime use.

There’s a massive delay every packetsToBatch*batchSize samples read. Let’s say I use a sampling rate of 20MSPS, 6 packetsToBatch, and 256 batchSize. I can read 5 packets without delay, but that last packet causes a 16ms delay. If I only read one sample at a time with StreamRx, I can read 1535 samples just fine but that last one causes a 16ms delay. The delay also scales linearly when changing any of the three variables mentioned. Loss and overflow also scale the same way.
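To put the numbers above side by side: with 6 packets of 256 samples a batch is 1536 samples, so the stall lands exactly on every 1536th sample read. At 20 MSPS one batch spans only 76.8 µs, while a 16 ms stall corresponds to about 320,000 samples, i.e. roughly 208 batches. The helper names below are hypothetical and not part of LimeSuite; this is just the arithmetic written out.

```cpp
#include <cstdint>

// Samples in one DMA batch: packets per batch times samples per packet.
constexpr std::int64_t samplesPerBatch(std::int64_t packetsToBatch, std::int64_t batchSize) {
    return packetsToBatch * batchSize;
}

// True when reading sample number n (1-based) completes a batch, which is
// the read that was observed to stall.
constexpr bool completesBatch(std::int64_t n, std::int64_t perBatch) {
    return n % perBatch == 0;
}

// Wall-clock time, in microseconds, that one batch spans at sample rate fs.
double batchDurationUs(std::int64_t perBatch, double fs) {
    return static_cast<double>(perBatch) / fs * 1e6;
}

// Number of samples that arrive during a stall of the given length.
double samplesDuringStall(double stallSeconds, double fs) {
    return stallSeconds * fs;
}
```

The stall being hundreds of batches long, rather than about one batch, is what makes it look like something other than normal batching latency.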

@ricardas Any thoughts on this?

It’s hard to say what’s going on there without more information. There definitely should not be such a delay.

But assuming:

- you’re running on “Host: Pi CM4 with Ubuntu 20.04.5”

- you’re losing data: “Loss and overflow also scale the same way”

- you’re using MIMO

If you’re having data loss, that means the CPU can’t process the data fast enough. I also don’t know what you’re doing to process it.

I don’t have CM4 on hand right now to retest, but the last time I was using it the situation was like this:

- MIMO vs SISO: with MIMO the sample data needs to be deinterleaved, which uses more CPU and less efficient memory access. As a result, sample deinterleaving with MIMO is about 5x slower than with SISO.

- I don’t remember the exact numbers, but on the CM4, MIMO at 5 MSPS was fine and 10 MSPS was on the edge: if the system ran some extra task, it would cause data loss. (You’re running Ubuntu, so there might be a lot of unnecessary background tasks waking up and creating interruptions.) SISO was a lot better; I think 20 MSPS ran fine, but it depended on what additional processing was being done with the data.

- I was running Raspbian OS with the desktop disabled, because the graphical interface consumed noticeable CPU time, and interacting with it would also cause CPU usage spikes, provoking sample loss.
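A minimal sketch of what deinterleaving means here, assuming (hypothetically) that a two-channel buffer holds complex samples alternating A0, B0, A1, B1, ... with 16-bit I and Q. The actual XTRX/LimeSuite packet layout may differ, and the type and function names are illustrative only. Every sample is touched once with a strided access pattern, which is the extra CPU and memory cost mentioned above; SISO data can be handed over without this step.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// One complex sample with 16-bit I and Q (assumed format).
struct IQ16 {
    std::int16_t i;
    std::int16_t q;
};

// Split an interleaved two-channel buffer (A0, B0, A1, B1, ...) into
// separate per-channel buffers. A trailing unpaired sample is ignored.
void deinterleave2(const std::vector<IQ16>& interleaved,
                   std::vector<IQ16>& chA, std::vector<IQ16>& chB) {
    const std::size_t frames = interleaved.size() / 2;
    chA.resize(frames);
    chB.resize(frames);
    for (std::size_t n = 0; n < frames; ++n) {
        chA[n] = interleaved[2 * n];      // even positions: channel A
        chB[n] = interleaved[2 * n + 1];  // odd positions: channel B
    }
}
```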

So I’d suggest trying 5 MSPS MIMO or 10 MSPS SISO.

I don’t think it’s down to hardware speed. When running at 10MSPS SISO all cores stay under 10% utilization. I’m using the rfTest application, so no processing is being done after reading in samples.

The things that I find confusing:

- The delayed readout only happens on the batched packets boundary, i.e., I can read 1535 samples fine, but the last one takes a few milliseconds. It’s almost like it’s only reading one batch at a time and waiting or something.

- The delay gets bigger as the sample rate decreases

Some outputs at various sampling rates. rxPacketsInBatch is 6 and rxSamplesInPacket is 256.

- 10 MSPS
  - Read time is 39 ms
  - /dev/LimeXTRX0_trx0 Rx: 39.817 MB/s | TS:103022592 pkt:1572 o:262(+25) l:262(+25) dma:1536/1536(0) swFIFO:0
- 20 MSPS
  - Read time is 19 ms
  - /dev/LimeXTRX0_trx0 Rx: 81.307 MB/s | TS:39714816 pkt:606 o:101(+51) l:101(+51) dma:25856/26112(256) swFIFO:0
- 30 MSPS
  - Read time is 13 ms
  - /dev/LimeXTRX0_trx0 Rx: 121.906 MB/s | TS:703070208 pkt:10722 o:1787(+77) l:1787(+77) dma:64512/64512(0) swFIFO:0
- 40 MSPS
  - Read time is 9 ms
  - /dev/LimeXTRX0_trx0 Rx: 162.614 MB/s | TS:602013696 pkt:9186 o:1531(+102) l:1531(+102) dma:64256/64512(256) swFIFO:0
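A quick sanity check on the Rx rates above. Assume a single channel with 16-bit I and 16-bit Q per sample (4 bytes; this is an assumption about the wire format) and 1 MB = 10^6 bytes. Under those assumptions, the expected payload rate is fs × 4 bytes, i.e. 40, 80, 120 and 160 MB/s. The reported figures are all within about 2% of that. The function names are hypothetical.

```cpp
#include <cmath>

// Expected payload rate in MB/s (1 MB = 1e6 bytes), assuming each sample
// is one complex value with 16-bit I and 16-bit Q (4 bytes) per channel.
double expectedMBps(double sampleRate, int channels = 1, int bytesPerSample = 4) {
    return sampleRate * channels * bytesPerSample / 1e6;
}

// Relative difference between a measured and an expected rate.
double relativeError(double measured, double expected) {
    return std::fabs(measured - expected) / expected;
}
```

For example, relativeError(81.307, expectedMBps(20e6)) is about 0.016.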

Well, figured out why the delay is inversely proportional to sampling rate.

10msps - “Loss: pkt:192 exp: 25165824, got: 25557504, diff: 391680”

20msps - “Loss: pkt:339 exp: 44433408, got: 44825088, diff: 391680”

30msps - “Loss: pkt:330 exp: 43253760, got: 43645440, diff: 391680”

40msps - “Loss: pkt:759 exp: 99483648, got: 99875328, diff: 391680”

That’s with rxPacketsInBatch=6 and rxSamplesInPacket=512. With defaults the diff is 261120.
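The arithmetic behind this: if the timestamp diff is counted in samples (an assumption, though it fits the numbers), then the time skipped over is diff / fs. A constant 391680-sample gap works out to about 39.2, 19.6, 13.1 and 9.8 ms at 10, 20, 30 and 40 MSPS. That lines up closely with the read times reported above and explains why the delay shrinks as the sample rate grows. The helper name is hypothetical.

```cpp
#include <cstdint>

// Duration in milliseconds of a gap of `missingSamples` samples at rate fs.
double gapMs(std::int64_t missingSamples, double fs) {
    return static_cast<double>(missingSamples) / fs * 1e3;
}
```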

OK, so this shows that the DMA engine is producing data faster than the CPU is consuming it; that’s why there are overflows and the sample timestamps jump. The low CPU usage is suspicious: it’s possible that the CPU is sleeping too long.

Things to check:

- The Rx thread is set to use REALTIME scheduling (TRXLooper.cpp#L509-514), which, depending on Linux kernel settings, can result in RT throttling (the kernel should warn about that in syslog) if the thread spends too much time working. This should not happen, as data transfer interrupts have been implemented, so the thread sleeps while there is no data and RT throttling is avoided.

- Add extra config to the stream at rfTest.cpp#L70 to try smaller batches, or to disable polling so the thread does not sleep and instead constantly reads the DMA counters.

  ```cpp
  stream.extra = new SDRDevice::StreamConfig::Extras();
  stream.extra->usePoll = false; // Might provoke RT throttling, thread scheduling should be changed to DEFAULT.
  stream.extra->rxSamplesInPacket = 256; // samples per packet
  stream.extra->rxPacketsInBatch = 2;    // packets per DMA batch (smaller batches)
  ```

- Missed interrupts: very unlikely. If an interrupt is missed, the Rx thread would not wake up in time to process the data, but even then it should not result in data overflow. You can enable the kernel module’s interrupt debug messages (main.c#L37) to see in the system log whether they are being received consistently.
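For reference, here is a minimal POSIX sketch of switching the calling thread between real-time (SCHED_FIFO) and default (SCHED_OTHER) scheduling. This is the kind of change the usePoll comment refers to. It is not the TRXLooper code, and the helper names and the choice of the lowest FIFO priority are assumptions. On Linux, raising a thread to SCHED_FIFO normally needs elevated privileges (CAP_SYS_NICE or a suitable RLIMIT_RTPRIO), and RT throttling is governed by the kernel’s sched_rt_runtime_us setting.

```cpp
#include <pthread.h>
#include <sched.h>

// Set the scheduling policy of the calling thread.
// realtime == true  -> SCHED_FIFO at the lowest real-time priority
// realtime == false -> SCHED_OTHER (default time-sharing policy, priority 0)
// Returns 0 on success or an errno value (e.g. EPERM without privileges).
int setCurrentThreadScheduling(bool realtime) {
    sched_param param{};
    const int policy = realtime ? SCHED_FIFO : SCHED_OTHER;
    param.sched_priority = realtime ? sched_get_priority_min(SCHED_FIFO) : 0;
    return pthread_setschedparam(pthread_self(), policy, &param);
}

// Read back the calling thread's current policy, or -1 on error.
int currentThreadPolicy() {
    int policy = 0;
    sched_param param{};
    if (pthread_getschedparam(pthread_self(), &policy, &param) != 0) {
        return -1;
    }
    return policy;
}
```

Calling setCurrentThreadScheduling(false) at the start of the Rx loop would be one way to try the busy-polling configuration without risking RT throttling.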

There is a ring queue of 256 DMA buffers; irqPeriod (TRXLooper_PCIE.cpp#L685) specifies after how many buffers the interrupt should be triggered. Each buffer is filled with rxPacketsInBatch*rxSamplesInPacket samples. Generally, for XTRX the buffer should be less than 8 KB.
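A rough sizing helper for the parameters in the paragraph above. It assumes 4 bytes per sample per channel (16-bit I and Q) and ignores packet headers; both are assumptions. It treats irqPeriod as a buffer count, as described. With 6 × 256 samples, a single-channel buffer comes to 6144 bytes, under the 8 KB guideline, while 6 × 512 comes to 12288 bytes. The function names are hypothetical.

```cpp
#include <cstdint>

constexpr std::int64_t kRingBuffers = 256;  // DMA ring size from the description above

// Bytes in one DMA buffer, assuming 4 bytes per sample per channel and
// ignoring any per-packet header overhead.
constexpr std::int64_t bufferBytes(std::int64_t packetsInBatch, std::int64_t samplesInPacket,
                                   std::int64_t channels = 1, std::int64_t bytesPerSample = 4) {
    return packetsInBatch * samplesInPacket * channels * bytesPerSample;
}

// Time between interrupts in milliseconds when one IRQ fires every
// irqPeriod buffers and each buffer holds samplesPerBuffer samples.
double irqIntervalMs(std::int64_t irqPeriod, std::int64_t samplesPerBuffer, double fs) {
    return static_cast<double>(irqPeriod * samplesPerBuffer) / fs * 1e3;
}

// Time for the DMA engine to fill the entire ring, in milliseconds.
double ringFillMs(std::int64_t samplesPerBuffer, double fs) {
    return irqIntervalMs(kRingBuffers, samplesPerBuffer, fs);
}
```

At 20 MSPS with 1536-sample buffers, filling the whole ring takes about 19.7 ms. If an interrupt only fired once the ring was full, the reader would wake up roughly that rarely.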

Ah I just realized I forgot to update this thread.

Turns out… I was using mismatched branches for the kernel and userland drivers, so the structs didn’t line up. The kernel driver was reading 0 for the number of IRQs, so the IRQ would only go off once all of the buffers had been filled. The PCI driver logging definitely came in handy for tracking that down.
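Mismatches like this are easy to miss because nothing fails loudly; a field just reads as zero. One common guard is to put a version field and the struct size at the start of every structure shared between user space and a kernel module, and reject requests that don’t match. It is sketched here with hypothetical names; this is not the actual litepcie or LimeSuite interface.

```cpp
#include <cstdint>

// Hypothetical layout shared by the user-space driver and the kernel module.
constexpr std::uint32_t kDmaConfigVersion = 2;

struct DmaConfig {
    std::uint32_t version;      // must equal kDmaConfigVersion
    std::uint32_t structSize;   // sizeof(DmaConfig) as compiled by the sender
    std::uint32_t bufferBytes;
    std::uint32_t irqPeriod;    // buffers per interrupt; 0 is invalid
};

// Catch accidental layout changes at compile time.
static_assert(sizeof(DmaConfig) == 16, "DmaConfig layout changed; bump kDmaConfigVersion");

DmaConfig makeDmaConfig(std::uint32_t bufferBytes, std::uint32_t irqPeriod) {
    return DmaConfig{kDmaConfigVersion, sizeof(DmaConfig), bufferBytes, irqPeriod};
}

// Receiver-side check: refuse mismatched or nonsensical configurations
// instead of silently using whatever bytes happen to be there.
bool isValidDmaConfig(const DmaConfig& cfg) {
    return cfg.version == kDmaConfigVersion &&
           cfg.structSize == sizeof(DmaConfig) &&
           cfg.irqPeriod != 0;
}
```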

Pretty happy with performance. Nice clean signal except for the carrier leakage, which is ~10 dB higher than the signal.

I am just trying to get the XTRX installed so LimeSuite can even see it. I have several XTRXs from Fairwaves, rev3 to rev5. Can someone point me to a way to get LimeSuite to see the XTRX? From there I can go to gr-limesdr.


I have some questions about this version of LimeSuite: I’m trying to use XTRX (rev.5) for openAirInterface (OAI). However, I noticed that the functions have been renamed (or completely restructured). Investigating a little further, I saw that the headers that were previously included in /usr/local/include/lime have changed, which makes it impossible to use the old functions in the OAI (these functions are in LimeSuite.h). Another solution would be to use SoapySDR, but it also seems to be having problems. My questions are:

1 - Is it still possible to somehow use the LimeSuite.h functions in OAI (i.e. have they been updated as well)? Or would I have to readjust the code to comply with the new functions?

2 - Are there any plans to integrate SoapySDR?

There will be a new major version of the Lime Suite API, which at the present time is all that can be used with XTRX, but a legacy wrapper will be provided for the old API. However, my understanding is that this is not available yet, so if you are experimenting with the new gateware on a Fairwaves XTRX and the new branch of Lime Suite, you have no option but to modify applications to use the new API.

Thank you for the quick reply, @andrewback. I was expecting that, so I was already going along with it. I have had some problems trying to run with the new API. The main one was the error

Rx ch0 filter calibration failed: SXR tune failed

when I tried to configure the bandwidth with these lines of code, for example:

```cpp
// [...]
config.channel[0].rx.lpf = 5e6; // Rx low-pass filter bandwidth (5 MHz)
config.channel[0].tx.lpf = 5e6; // Tx low-pass filter bandwidth (5 MHz)
// [...]
xtrx_device->Configure(config, 0);
```

Also I don’t know how to setup the gain (I saw some “TODO” in the code referring to this, maybe it hasn’t been implemented yet).

I’m afraid I can’t help, but @ricardas may be able to.


Hi, I just fixed the calibration error issue.

I’m also already working on OAI integration, but the code is not yet published. I can’t add it to the LimeSuite repo, as OAI’s plugin header requires a bunch of their other internal headers.

And since LimeSuite is still a work in progress, I don’t want to push the plugin to the OAI repo, as that would guarantee breakages. I could share a patch of the plugin if you need it.

The gain API is not implemented yet, as there are multiple stages that are not linearly dependent, so I need to think of a way to generalize it into a common API that could work with different devices. So right now, from the code’s perspective, gain is set by retrieving the LMS7002M object from the SDRDevice and manually modifying the desired gain parameters.
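To illustrate why a single gain number is awkward to generalize, here is a hedged sketch. It splits a requested total gain across several stages that each have their own range and step size. It fills the earliest stage first, which is a common choice for noise figure. The stage names and dB ranges are placeholders, not LMS7002M register values, and this is not how LimeSuite implements it.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// A gain stage with a maximum gain and a fixed step, in dB (stepDb > 0).
// Any names and ranges used with this are hypothetical placeholders.
struct GainStage {
    std::string name;
    double maxDb;
    double stepDb;
};

// Greedily assign gain to stages in order (earliest stage first), rounding
// each stage down to its step size. Returns the per-stage settings in dB.
std::vector<double> distributeGain(const std::vector<GainStage>& stages, double requestedDb) {
    std::vector<double> settings;
    double remaining = std::max(0.0, requestedDb);
    for (const GainStage& s : stages) {
        double g = std::min(remaining, s.maxDb);
        g = static_cast<int>(g / s.stepDb) * s.stepDb;  // snap down to a valid step
        settings.push_back(g);
        remaining -= g;
    }
    return settings;
}
```

With placeholder stages of 30 dB/1 dB, 12 dB/3 dB and 31 dB/1 dB, a 40 dB request becomes 30 + 9 + 1 dB. Different step sizes and orderings give different results, which is the generalization problem described above.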

The LimeSuite.h C API is currently not connected to the new implementation, so it’s not usable right now. I do intend to make it usable for backwards compatibility, but that’s not guaranteed and is low priority.

SoapySDR integration will be updated later.


Hi @ricardas! Thank you for solving the calibration problem!

That’s great to hear! If you could send me the patch for OAI, I would appreciate it!!

And thanks for the development, I’ve been following the repository every day and it’s great to see that you’re working on it every day!

@mateusm

I have a fork of srsRAN where I added support for XTRX (link here), it’s actually what I was running in my screenshots above. The version of srsRAN could use updating, and the API version I wrote it against is also out of date, but it should still work as is. I’ll probably be updating it in the next couple of weeks.

I had to use the lime gui (can’t remember what it’s called) to adjust the DC compensation, but it sounds like the calibration is fixed now, so you should be able to just add txcalibration and rxcalibration to the args.
