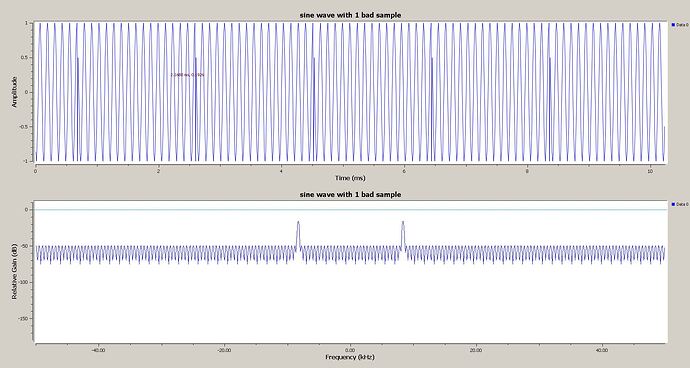

(I’m not a Lime developer, but) this happens with all SDR hardware when you retune the frequency. It is caused by invalid input and discontinuities in the samples. For simplicity, imagine the samples from a single real ADC are 12, 13, 15, 17, 18 (and then you retune the frequency). There is a delay while the PLL(s) lock from the old to the new frequency (during which time the ADC is not sampling a valid input), and then, due to the change in frequency, the samples could be 128, 130, 131, 133, … The invalid samples and that discontinuity in the time domain may manifest as a temporary rise in the noise floor across all frequencies in the frequency domain, resulting in a loud click or pop.

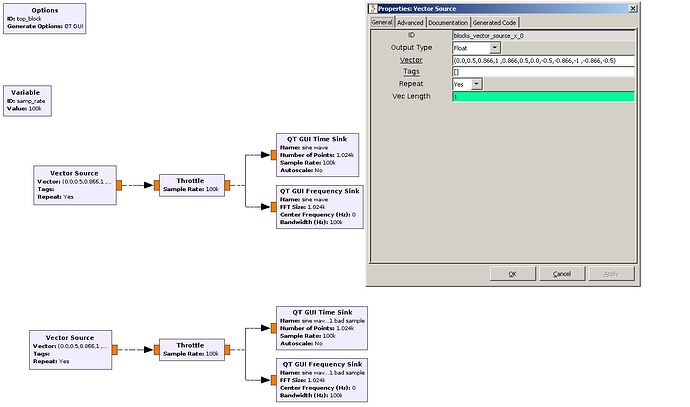

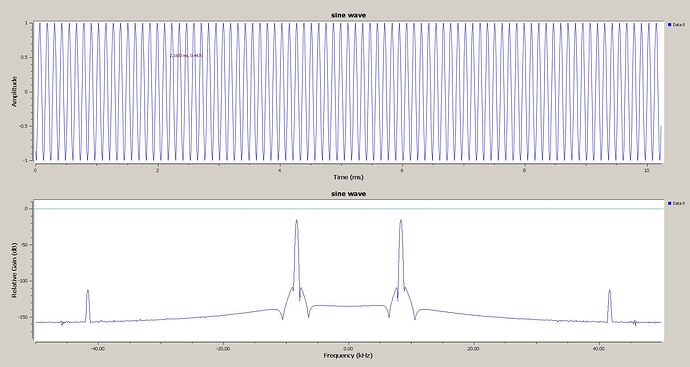

The sine wave vector is just the values of sin(x), where x steps by 30 degrees until it repeats, e.g. (0.0, 0.5, 0.866, 1, 0.866, 0.5, 0.0, -0.5, -0.866, -1, -0.866, -0.5). In the one-bad-sample vector I changed 1 value in the valid sine wave and then padded out the vector with 15 other valid sine wave vectors (I should have used more valid sine waves). It is not perfect for showing the effect, but it is good enough (you are seeing far too much of the actual signal, and not enough noise).
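For anyone who wants to reproduce the effect, here is a minimal sketch in plain Python. It is not the script used for the plots above: the naive DFT helper, the choice of which sample to corrupt, and the replacement value are all assumptions made for illustration.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Magnitude of each DFT bin (naive O(N^2) DFT, fine for short vectors)."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(samples)))
        for k in range(n)
    ]

# One sine period sampled every 30 degrees (12 samples).
period = [math.sin(math.radians(30 * i)) for i in range(12)]

# 16 clean periods back to back.
clean = period * 16

# Same vector with a single corrupted sample in the first period.
# Index and value are arbitrary choices for this sketch.
bad = list(clean)
bad[3] = -1.0  # the valid value here is 1.0

clean_mag = dft_magnitudes(clean)
bad_mag = dft_magnitudes(bad)

# The tone lands in bins 16 and N-16; treat every other bin as the floor.
tone_bins = {16, len(clean) - 16}
clean_floor = max(m for k, m in enumerate(clean_mag) if k not in tone_bins)
bad_floor = max(m for k, m in enumerate(bad_mag) if k not in tone_bins)

print(f"clean floor: {clean_floor:.3e}")
print(f"one bad sample floor: {bad_floor:.3e}")
```

The clean vector has essentially nothing outside the two tone bins, while the single bad sample acts like an impulse and lifts every other bin to roughly the size of the change (about 2 here), which is the "raised noise floor across all frequencies" effect.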

What some software does when you tell it to retune is slowly attenuate the incoming samples to zero, then zero-pad during the retune, and then, after it confirms that all the PLLs (phase-locked loops) have locked to the new frequency and that the ADC is returning valid samples, slowly ramp the signal level back up from zero to avoid any discontinuity.
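The fade-out, zero-pad, fade-in idea can be sketched as a gain envelope multiplied onto the sample stream. This is only an illustration: the function name `retune_envelope`, the raised-cosine ramp shape, and all the lengths are assumptions, and real software would get the retune window from the driver's PLL lock status rather than from fixed indices.

```python
import math

def retune_envelope(n_total, retune_start, retune_len, ramp_len):
    """Per-sample gain: 1.0 normally, a raised-cosine fade to 0 just before
    the retune, 0 while the PLLs relock, then a raised-cosine fade back to 1.
    Assumes the ramps fit inside the buffer."""
    gain = [1.0] * n_total
    retune_end = retune_start + retune_len
    for i in range(ramp_len):
        # w falls smoothly from just under 1 to just above 0.
        w = 0.5 * (1 + math.cos(math.pi * (i + 1) / (ramp_len + 1)))
        gain[retune_start - ramp_len + i] = w   # fade out
        gain[retune_end + i] = 1.0 - w          # fade in
    for i in range(retune_start, retune_end):
        gain[i] = 0.0                           # zero-pad the invalid samples
    return gain

def max_step(values):
    """Largest jump between neighbouring samples."""
    return max(abs(b - a) for a, b in zip(values, values[1:]))

# Old signal level, invalid ADC output during the retune, new signal level.
raw = [15.0] * 40 + [999.0] * 10 + [130.0] * 40
gain = retune_envelope(len(raw), retune_start=40, retune_len=10, ramp_len=16)
smoothed = [g * x for g, x in zip(gain, raw)]

print("largest jump, raw:", max_step(raw))
print("largest jump, smoothed:", max_step(smoothed))
```

The longer the ramp, the smaller the largest sample-to-sample jump, at the cost of muting more of the signal around each retune.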

What you may be able to do with the LimeSDR is retune digitally on the TSP and avoid some delay and discontinuity, provided the analogue RX mixer does not need to be retuned to track the signal.
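To show why a digital retune can avoid the discontinuity, here is a software stand-in for a numerically controlled oscillator (NCO) mixer. It is not the LimeSuite API or the TSP's actual implementation; the `Nco` class, the sample rate, and the frequencies are hypothetical. The point is that the phase accumulator keeps running when the frequency changes, so no samples are invalid and the output does not jump.

```python
import cmath
import math

class Nco:
    """Illustrative NCO mixer with a running phase accumulator."""

    def __init__(self, sample_rate):
        self.sample_rate = sample_rate
        self.phase = 0.0   # radians, carried over across retunes
        self.step = 0.0    # phase advance per sample

    def set_frequency(self, freq_hz):
        # Only the step changes; the accumulated phase is kept.
        self.step = 2 * math.pi * freq_hz / self.sample_rate

    def mix(self, samples):
        out = []
        for s in samples:
            out.append(s * cmath.exp(-1j * self.phase))
            self.phase = (self.phase + self.step) % (2 * math.pi)
        return out

fs = 1_000_000.0
# Wideband capture containing a tone 100 kHz above the analogue LO.
capture = [cmath.exp(2j * math.pi * 100_000.0 * n / fs) for n in range(200)]

nco = Nco(fs)
nco.set_frequency(100_000.0)          # tone is shifted down to DC
first = nco.mix(capture[:100])

nco.set_frequency(50_000.0)           # digital retune, no PLL relock
second = nco.mix(capture[100:])

out = first + second
jump_at_retune = abs(out[100] - out[99])
print("jump at the retune boundary:", jump_at_retune)
```

This only works while the wanted signal stays inside the bandwidth the analogue front end is already delivering, which is the same proviso as above about the RX mixer not needing to be retuned.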