Hi,

I’m using SoapySDR to transmit a QPSK signal. The generated signal has a symbol rate of 3.375 kHz (6.75 kbps data rate). It is RRC filtered (roll-off r = 0.6) at 8 samples per symbol, giving 27 kHz, then resampled by a factor of 186 to 5.022 MHz before being sent to the SDR in CF32 format through SoapySDR. The RX and TX sample rates are both set to 5.022 MHz. For my tests I’m using the ISM band at 914 MHz with reasonably well-matched antennas (about 1.4 VSWR measured with a Nano-VNA, the best I could get with those antennas).
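
As a quick check of the rate chain described above, here is a minimal Python sketch. It is illustrative only, not the proprietary code, and every name in it is hypothetical; the values are the ones quoted in this post.

```python
# Rate chain from the post: symbol rate -> RRC output rate -> device rate.
SYMBOL_RATE_HZ = 3375      # 3.375 kHz QPSK symbol rate
BITS_PER_SYMBOL = 2        # QPSK carries 2 bits per symbol
SAMPLES_PER_SYMBOL = 8     # RRC filter output, samples per symbol
INTERPOLATION = 186        # resampling factor applied before the SDR


def rate_chain(symbol_rate_hz, bits_per_symbol, sps, interpolation):
    """Return (bit rate, RRC output rate, device sample rate), all in Hz."""
    bit_rate_hz = symbol_rate_hz * bits_per_symbol
    rrc_rate_hz = symbol_rate_hz * sps
    device_rate_hz = rrc_rate_hz * interpolation
    return bit_rate_hz, rrc_rate_hz, device_rate_hz


bit_rate_hz, rrc_rate_hz, device_rate_hz = rate_chain(
    SYMBOL_RATE_HZ, BITS_PER_SYMBOL, SAMPLES_PER_SYMBOL, INTERPOLATION
)
print(bit_rate_hz, rrc_rate_hz, device_rate_hz)  # 6750 27000 5022000
```

The three numbers match the 6.75 kbps, 27 kHz and 5.022 MHz figures above, so the rates themselves are self-consistent.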

When using the USRP B200, I get a clean signal with a bandwidth of 5.4 kHz (Fsym × (1 + r)), measured with a spectrum analyzer and an RTL-SDR. I’m also able to decode the signal I’m sending.

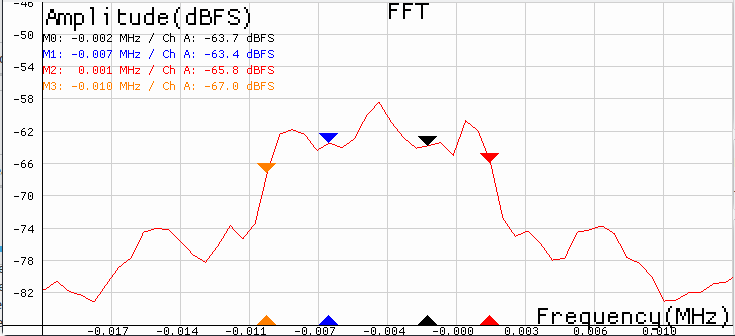

When I use the LimeSDR-USB, the same signal has a bandwidth of 10.8 kHz, measured with the same RTL-SDR (I no longer have access to the spectrum analyzer, but I trust this measurement because other known signals look just fine). To get the right signal, I need to send twice as many samples as for the B200 at the same sample rate (or set half the sample rate and send the same number of samples), which makes no sense.
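
To put a number on the symptom, the sketch below compares a measured bandwidth with the expected Fsym × (1 + r). It assumes the on-air bandwidth scales linearly with the rate at which samples are actually clocked out, which is true for any fixed waveform. The function names are hypothetical, and the result only quantifies the mismatch; it says nothing about where the mismatch comes from.

```python
def rrc_occupied_bandwidth_hz(symbol_rate_hz, rolloff):
    """Occupied bandwidth of an RRC-shaped signal: Fsym * (1 + r)."""
    return symbol_rate_hz * (1.0 + rolloff)


def implied_rate_ratio(observed_bw_hz, symbol_rate_hz, rolloff):
    """Ratio between the effective playback rate and the intended rate."""
    return observed_bw_hz / rrc_occupied_bandwidth_hz(symbol_rate_hz, rolloff)


expected_bw_hz = rrc_occupied_bandwidth_hz(3375, 0.6)
b200_ratio = implied_rate_ratio(5400.0, 3375, 0.6)    # measured on the B200
lime_ratio = implied_rate_ratio(10800.0, 3375, 0.6)   # measured on the LimeSDR-USB
print(round(expected_bw_hz), round(b200_ratio, 3), round(lime_ratio, 3))  # 5400 1.0 2.0
```

A ratio of 2.0 is what you would see if the samples left the LimeSDR at twice the configured rate, which is consistent with needing to send twice as many samples to get the right signal.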

A data burst of 120 ms at a symbol rate of 3.375 kHz is 405 symbols, which, once resampled to 5.022 MHz, should be 602640 samples (405 × 8 × 186). That is exactly the number of samples I get after resampling, and the number of samples I send to the SDR.
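
The burst arithmetic can be reproduced with a few lines of Python. This is a sketch with hypothetical names that uses only the figures quoted in this post.

```python
def burst_length(duration_ms, symbol_rate_hz, sps, interpolation):
    """Return (symbols, samples at the device rate) for one burst."""
    symbols = duration_ms * symbol_rate_hz // 1000
    samples = symbols * sps * interpolation
    return symbols, samples


burst_symbols, burst_sample_count = burst_length(120, 3375, 8, 186)

# Airtime of the burst if the device really runs at 5.022 MHz.
airtime_ms = burst_sample_count * 1000 / 5_022_000
print(burst_symbols, burst_sample_count, airtime_ms)  # 405 602640 120.0
```

At the configured rate, the 602640 samples should therefore occupy exactly 120 ms on air.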

I tried exporting the signal to a ‘cfile’ (which is really just complex samples written to a binary file) and playing it back in GNU Radio Companion. The signal that is sent to the LimeSDR, and that gives me 10.8 kHz over the air, measures 5.4 kHz of bandwidth in GRC. Even retransmitting the recorded signal through GRC (which uses UHD) into the LimeSDR requires me to set the sample rate to half of what it really is (or to send twice as many samples as there really are).
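
For anyone who wants to inspect such a file, here is a minimal sketch of the format. It assumes the usual GNU Radio complex file layout: interleaved 32-bit float I and Q values in native byte order, 8 bytes per sample. It uses only the Python standard library, and the function names and file name are hypothetical.

```python
import array
import os
import tempfile


def write_cfile(path, samples):
    """Write complex samples as interleaved float32 I/Q (native byte order)."""
    interleaved = array.array("f")
    for sample in samples:
        interleaved.append(sample.real)
        interleaved.append(sample.imag)
    with open(path, "wb") as handle:
        interleaved.tofile(handle)


def read_cfile(path):
    """Read interleaved float32 I/Q back into a list of complex samples."""
    interleaved = array.array("f")
    value_count = os.path.getsize(path) // interleaved.itemsize
    with open(path, "rb") as handle:
        interleaved.fromfile(handle, value_count)
    return [complex(i, q) for i, q in zip(interleaved[0::2], interleaved[1::2])]


# Four QPSK constellation points, exactly representable in float32.
qpsk_points = [complex(0.5, 0.5), complex(-0.5, 0.5),
               complex(-0.5, -0.5), complex(0.5, -0.5)]

with tempfile.TemporaryDirectory() as tmp_dir:
    cfile_path = os.path.join(tmp_dir, "burst.cfile")
    write_cfile(cfile_path, qpsk_points)
    cfile_size_bytes = os.path.getsize(cfile_path)
    restored_points = read_cfile(cfile_path)

print(cfile_size_bytes, restored_points == qpsk_points)  # 32 True
```

Dividing the file size by 8 gives the sample count, so under this layout a 602640-sample burst would be a 4821120-byte file.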

I also dug through the driver source code and the SoapySDR source code, but to no avail: I could not find anything related to this issue. I tried both the latest tagged versions and the master branches of every library I use.

I cannot share code as it is proprietary (it belongs to one of my clients), but I can answer any questions. I might be able to share the generated signal, as this is what’s being sent over the air anyway.

I am on Ubuntu 18.04 LTS with all of the latest updates. I tried both USB 2.0 and USB 3.0 with no change. I don’t have access to any other device that can transmit (social distancing / working from home), but I have personally confirmed that the same setup works on a USRP B200.

Thanks,

JD