I’m getting inconsistent results testing my LimeSDR USB in GRC. The alignment of the A and B channels in MIMO mode changes with every run, and occasionally I get time-domain spurs. My test generates a cosine on the outputs, and SMA cables loop each output back into its respective input.

For reference:

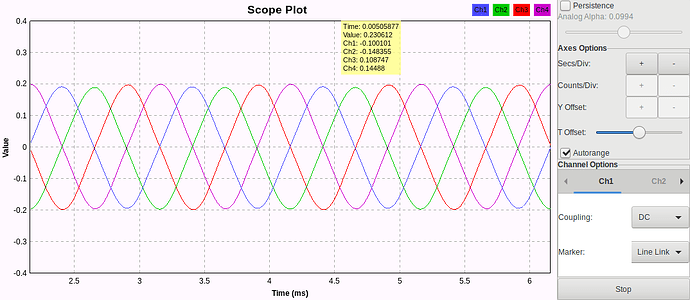

Ch1: A inphase

Ch2: A quadrature

Ch3: B inphase

Ch4: B quadrature

The first run is good:

The second run is out of alignment:

The third is… Jumpy:

Here is the graph I am using:

THE WHOLE STORY:

I’m writing data radio software that accesses the LMS API through JNA. I’m using real-world SMA loopbacks to see if it can talk to itself (I had already tested the software with simulated channels in the software domain). I used cables for the loopback to rule out incorrect use of the LimeSDR’s internal loopback functions. The software reported errors indicating that the A and B channels were out of alignment, and even when they were aligned, it had to do a lot of error correction to parse a frame.

Another round of integration tests confirmed that the alignment between A and B was not consistent. Since lag between the sends to A and B could cause the shift, I tried the timestamp scheduling feature in LMS_SendStream: I took the timestamp reported by the stream status and added some number like 1024*64. With that, the test sines being sent (not WFM) came out as garbage instead.
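To put a number on the A/B misalignment from run to run, something like the sketch below might help. It is plain Python with no LimeSuite calls; `make_tone` and `estimate_delay_samples` are made-up helper names, and the synthetic tones stand in for captured I/Q buffers. It assumes both channels carry the same complex tone, and it cannot tell a sample delay apart from a fixed RF phase offset between the channels, so treat the result as a relative indicator only.

```python
import cmath
import math


def make_tone(freq_hz, sample_rate_hz, count, delay_samples=0):
    """Synthetic complex tone standing in for one captured I/Q channel."""
    return [
        cmath.exp(2j * math.pi * freq_hz * (n - delay_samples) / sample_rate_hz)
        for n in range(count)
    ]


def estimate_delay_samples(chan_a, chan_b, freq_hz, sample_rate_hz):
    """Delay of chan_b relative to chan_a, in samples.

    Uses the mean phase difference at the tone frequency, so the answer is
    only unambiguous within +/- half a tone period.
    """
    # a * conj(b) has phase 2*pi*f*d/fs when b is a delayed by d samples.
    acc = sum(a * b.conjugate() for a, b in zip(chan_a, chan_b))
    phase = cmath.phase(acc)
    return phase * sample_rate_hz / (2 * math.pi * freq_hz)
```

Feeding it the A and B buffers from several runs would show whether the offset is a stable constant (calibratable) or changes with every start of the streams.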

… Considering that this may be programmer error on my part, I wrote the graph above to test and found even more problems.

Is there an explanation for the issues in GRC? Any tips for syncing the streams when using the LMS API?

UPDATE: After looking at this post, trying higher sampling rates cleared the problem up. When I lowered the cosine frequency to 100 Hz the problem returned, so this may be caused by the DC offset corrector. The OP in that post was using a 100 kHz tone for testing. That’s got to be the loosest definition of ‘DC’ I’ve ever seen. What’s bothersome is that, if DC correction really is the cause, any spurious carrier near zero frequency could trigger this whenever the corrector is on and splatter harmonics all over the baseband!
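To illustrate the suspicion, here is a rough model of a DC corrector as a first-order tracker that subtracts a running estimate of the offset. This is a generic textbook DC blocker, not the LimeSDR's actual corrector; the function names, the 1 MS/s rate, and the `alpha` value are all assumptions picked for the demonstration.

```python
import math


def dc_block(samples, alpha):
    """Subtract a slowly tracking DC estimate from each sample."""
    dc = 0.0
    out = []
    for x in samples:
        dc += alpha * (x - dc)  # running estimate of the offset
        out.append(x - dc)
    return out


def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))


def tone_gain(freq_hz, sample_rate_hz, alpha, count=40_000, tail=10_000):
    """Steady-state amplitude ratio (out/in) of a cosine through dc_block."""
    x = [math.cos(2 * math.pi * freq_hz * n / sample_rate_hz) for n in range(count)]
    y = dc_block(x, alpha)
    # Measure over the tail only, after the tracker has settled.
    return rms(y[-tail:]) / rms(x[-tail:])
```

Calling `tone_gain(100.0, 1e6, 2**-10)` and `tone_gain(100e3, 1e6, 2**-10)` with these made-up numbers shows the 100 Hz tone coming out at roughly half amplitude while the 100 kHz tone passes nearly untouched. The tracker treats anything slow enough as "offset", which fits the behaviour I saw, though it doesn't by itself explain the jumps.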