Hello all, I’ve recently got my hands on a LimeSDR USB and have been excited to do some testing with it, but I’m seeing unexpected extra peaks in the received spectrum.

I am using a MixNV as a signal generator at 500 MHz and around -8 dBm. I’ve checked it with a spectrum analyzer to make sure the output is correct, and it is. The generated signal goes directly into the RX.1L input on the LimeSDR.

I am using this Python script to stream data and save one buffer’s worth of samples to a file. All of the parameters I’ve set on the LimeSDR are at the beginning of the file:

I am then using GNU Radio to read this file and display it in a QT GUI Sink:

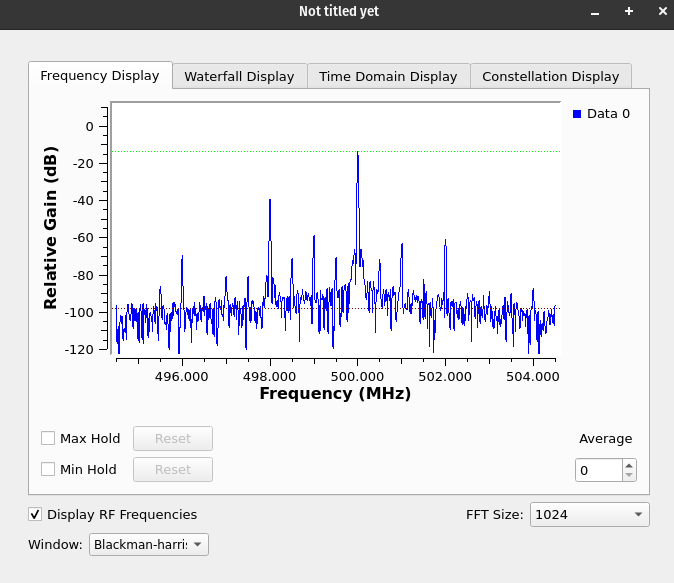

However, taking a quick look at this file, I notice a lot of extra signal peaks. My SDR is tuned to 499.5 MHz and the incoming signal is at 500 MHz, so I would expect a single peak at a +0.5 MHz offset and nothing else:

Notice all of the extra signals?
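For reference, here is a minimal sketch of where the tone should land, and where harmonics of it would fall if the extra peaks turn out to be multiples of the 0.5 MHz offset. That harmonic relationship is only a hypothesis to check against the plot, not something I’ve confirmed, and the 10 MS/s sample rate is an assumed placeholder rather than the value from my script.

```python
def baseband_offset_hz(signal_hz, lo_hz):
    """Offset at which a tone appears after the SDR is tuned to lo_hz."""
    return signal_hz - lo_hz


def wrap_to_band(freq_hz, sample_rate_hz):
    """Fold a frequency into the complex-sampled band [-fs/2, fs/2)."""
    half = sample_rate_hz / 2.0
    return (freq_hz + half) % sample_rate_hz - half


SIGNAL_HZ = 500e6        # siggen frequency (from the post)
LO_HZ = 499.5e6          # SDR tuning frequency (from the post)
SAMPLE_RATE_HZ = 10e6    # ASSUMPTION: placeholder, replace with the real rate

offset = baseband_offset_hz(SIGNAL_HZ, LO_HZ)

# Hypothetical check: where would the 1st..5th harmonics of the offset land?
harmonics = [wrap_to_band(n * offset, SAMPLE_RATE_HZ) for n in range(1, 6)]

print(f"expected tone offset: {offset / 1e6:+.3f} MHz")
for n, f in enumerate(harmonics, start=1):
    print(f"harmonic {n}: {f / 1e6:+.3f} MHz")
```

If the extra peaks in the plot line up with these offsets, that would point toward the receive chain being overdriven rather than a problem with the script.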

At first I thought something was wrong with the way I’d set everything up. As a sanity check, I grabbed my trusty old RTL-SDR and ran the same script with it, adjusting the sample rate and bandwidth as necessary since it’s a less capable device. Here are the results from that, with the parameters otherwise the same as before:

By using attenuators and reducing the output of my siggen to about -63 dBm, I can make the extra peaks go away.

I also have an XTRX handy (it uses the same LMS7002M chip), and the results from the XTRX and the LimeSDR are nearly identical, so it’s not a fluke with just my hardware.

Does anyone have any advice?